How Confident Are You, ChatGPT?

Published:

TL;DR: I present reasons to doubt that the key to OpenAI’s IMO gold was a universal verifier. Instead, I argue that uncertainty estimation and calibration are central to this research direction and likely to shape future OpenAI products.

Recently, both OpenAI and DeepMind entered their models in the International Mathematical Olympiad (IMO) competition in 2025 and achieved gold medals by correctly solving problems 1 through 5. Problem 6 remained unsolved by both models.

Interestingly, the solutions were written in informal natural language, not in formal systems like Lean. The latter approach, used by Harmonic’s Aristotle (gold) and ByteDance’s Seed Prover (silver), has an important advantage: Lean's compiler makes every proof verifiable. Informal reasoning, by contrast, risks hallucination.

Yet the fact that OpenAI and DeepMind produced correct solutions, later verified by human experts, highlights something important: progress in supervising tasks that are hard to verify.

Noam Brown (one of the three OpenAI employees who worked on the IMO project) said in a podcast with Sequoia’s Sonya Huang:

“We observed progress on hard-to-verify tasks, which was a departure from our previous focus on verifiable rewards, and this improvement is what excited us. [...]

Initially, when language models came out, the challenge was getting them to reason.

Once they could reason, the next challenge was getting them to reason on hard-to-verify tasks.

Now that they can reason on hard-to-verify tasks, I think the next hurdle will be getting them to come up with novel questions.”

Some speculated that OpenAI built a universal verifier—an LLM capable of judging outputs across domains. But such verifiers are imperfect, just like the proving model itself. This opens the door to reward hacking: the model learns to fool the verifier rather than solve the problem.

Lean, by contrast, is a limited but perfect verifier in its domain: it requires formal language, can only be applied to mathematics, but the resulting reward signal is always correct.
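To make "perfect verifier" concrete, here is a tiny Lean 4 example. It is only an illustration (the theorem name `add_comm_example` is made up for this post), but it shows the mechanism: the statement is accepted only if the supplied proof type-checks, so an accepted proof can be turned into a reward signal without a human or an LLM judge in the loop.

```lean
-- Lean 4: the compiler accepts this theorem only because the proof term
-- `Nat.add_comm a b` really has the stated type.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b
```

Changing the statement to something false, such as `a + b = a`, while keeping the same proof makes compilation fail. There is no way to "sound convincing" to the checker.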

In what follows, I present arguments that calibration, not a universal verifier, belongs at the center of recent progress.

Calibration, Not Just Verification

Calibration refers to how well a model’s predicted confidence scores match the actual likelihood of being correct. A well-calibrated model that says it’s 80% confident should be right about 80% of the time.
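One common way to put a number on this is the expected calibration error (ECE): group predictions by stated confidence and compare the average confidence in each group with the fraction that were actually correct. The sketch below is a minimal illustration with made-up data. The function name and the choice of ten equal-width bins are my own assumptions, not something taken from OpenAI or DeepMind.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between mean confidence and accuracy per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 lands in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Each bin contributes in proportion to how many predictions it holds.
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece


# Ten answers at 80% stated confidence, eight of them correct: well calibrated.
calibrated = expected_calibration_error([0.8] * 10, [True] * 8 + [False] * 2)

# Same stated confidence, but only four correct: overconfident.
overconfident = expected_calibration_error([0.8] * 10, [True] * 4 + [False] * 6)
```

In the first case the gap is essentially zero. In the second the model claims 80% but delivers 40%, so the error is about 0.4.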

Overconfidence, by contrast, means assigning high confidence to an answer that is actually wrong, and hallucination is one of its symptoms. Researchers from FAIR at Meta found that reasoning models tend to become overconfident after RL training.

My hypothesis that calibration training is behind OpenAI's progress on hard-to-verify tasks first took shape when I saw this post by Alexander Wei on X:

After listening to the podcast again, I noticed that uncertainty comes up throughout the conversation, even though the speakers make a strong effort to stay vague and not reveal too much about the underlying technique. It also features in the GPT-5 launch (more on that later).

As Brown notes:

“We allocated significant computational resources to problem six, but it was encouraging to see that the model didn't attempt to fabricate a solution and instead acknowledged its inability to solve it.

While it's somewhat disappointing to see the model work so hard only to conclude it has no answer, it's reassuring that it can recognize its limitations.

This level of self-awareness in the model is remarkable, especially considering that earlier models would often try to be helpful by generating incorrect answers.”

One of the intriguing aspects of these models is their ability to express uncertainty or confidence in natural language throughout the problem-solving process, even when the proofs themselves are beyond my comprehension.

The model uses words that indicate its level of certainty, such as frequently saying "good" when confident or using numerous question marks when unsure.

It's interesting that I can follow the model's thought process and gauge its confidence in its progress, even if I can't determine the correctness of its solutions.

You often encounter the dreaded "seems hard" response.

That occurred on problem six.

The model frequently expressed difficulty.

It would respond with phrases like "No progress," "Hard," "Seems hard," and "Keep going."

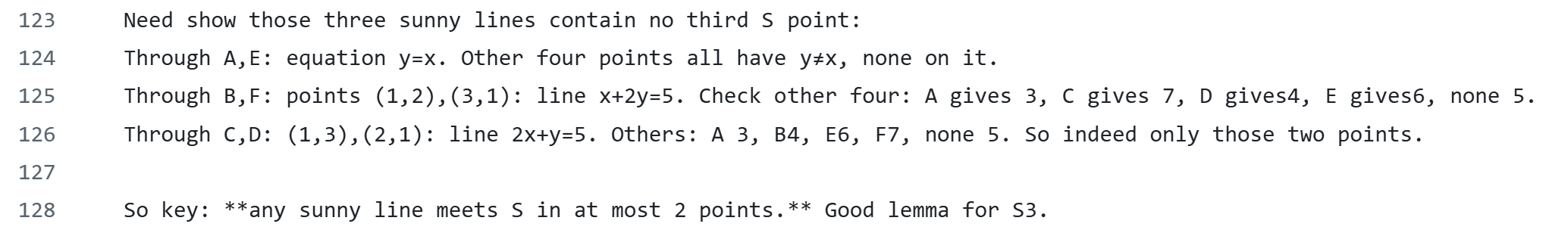

These words can indeed be observed in the outputs OpenAI has released. This is strong evidence against the universal verifier theory: the model does not appear to have access to an external verifier. Instead, verification seems to have been absorbed into the model itself.

RL for Uncertainty Training

My hypothesis is that the team saw that the model initially showed a promising ability to estimate its own confidence in its reasoning, which led them to explore ways to bring out this behavior in post-training with RL. This would have involved a novel RL setup: rewarding the model for correct answers given with high confidence, and penalizing it when it confidently produces incorrect responses. A recent paper by researchers at MIT explores exactly such an RL calibration approach in more detail. This training then likely encouraged the model to engage in deeper reasoning and to develop an implicit internal verifier, resulting in observed artifacts like "Good" or "Seems hard" in the outputs.
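To illustrate what such a reward could look like, here is a minimal sketch. It assumes the model outputs an answer together with a confidence between 0 and 1, and it combines a correctness term with a Brier-style penalty (the squared gap between stated confidence and the actual outcome). This is one standard way to reward calibration, chosen by me for illustration. Nothing here is confirmed to be OpenAI's actual reward, and the function name is hypothetical.

```python
def calibration_reward(is_correct: bool, confidence: float) -> float:
    """Correctness reward minus a Brier-style penalty on the stated confidence.

    Illustrative assumption only, not a confirmed training objective.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    outcome = 1.0 if is_correct else 0.0
    # The penalty grows quadratically as confidence drifts away from the outcome.
    brier_penalty = (confidence - outcome) ** 2
    return outcome - brier_penalty


confident_right = calibration_reward(True, 0.9)   # 1 - 0.01
hesitant_right = calibration_reward(True, 0.1)    # 1 - 0.81
hesitant_wrong = calibration_reward(False, 0.1)   # 0 - 0.01
confident_wrong = calibration_reward(False, 0.9)  # 0 - 0.81
```

The ordering is the point: a confident correct answer scores best, a confident wrong answer scores worst, and admitting doubt on a wrong answer costs almost nothing. Under a reward shaped like this, "Seems hard" becomes a better move than a fabricated proof.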

Parallel Thinking and Multi‑Agent Systems

Another crucial factor in solving the IMO for both OpenAI and DeepMind was scaling up reasoning through parallel computation. This was particularly important as both teams aimed to solve problems under the same 4.5-hour time constraint as human contestants.

However, since models can run multiple processes simultaneously, comparing against human time is somewhat misleading—what truly matters is the total compute used, a detail neither team disclosed.

DeepMind highlighted parallel thinking in its statement:

“We achieved this year’s result using an advanced version of Gemini Deep Think [...] that incorporates some of our latest research techniques, including parallel thinking. This setup enables the model to simultaneously explore and combine multiple possible solutions before giving a final answer, rather than pursuing a single, linear chain of thought.”

OpenAI, on the other hand, emphasized the role of multi-agent systems:

“I believe both of you are on the multi-agent team. Please help me understand the role that multi-agent systems play in this process.”

“In addition to having the model think for extended periods and make progress on difficult-to-verify tasks, this also involved scaling up parallel compute, which has a multi-agent component. While we cannot go into too much detail about the exact techniques, this was certainly one way we were able to scale up test-time compute for the IMO. Regarding the multi-agent and parallel compute scaling, we prioritized generality in our techniques.”

The challenge with parallelization is avoiding overconfident hallucinations from multiple agents: without proper calibration, it is difficult to select the correct answer among the candidates. With the confidence training described above, the model would instead either withhold an answer until it is sure, or output a confidence score that helps rank candidate solutions. That enables better use of parallel compute by choosing the highest-confidence result.
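The selection step can be sketched in a few lines. The code assumes each parallel run returns an answer plus a self-reported confidence. The `Candidate` type, the `select_answer` function, and the 0.7 threshold are all hypothetical names and values chosen for illustration, not details disclosed by either team.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    answer: str
    confidence: float  # self-reported, assumed to lie in [0, 1]


def select_answer(
    candidates: Sequence[Candidate], min_confidence: float = 0.7
) -> Optional[Candidate]:
    """Return the highest-confidence candidate, or None to abstain."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: c.confidence)
    # Abstain rather than submit an answer that no run is sure about.
    return best if best.confidence >= min_confidence else None


runs = [
    Candidate("proof sketch A", 0.35),
    Candidate("proof sketch B", 0.92),
    Candidate("proof sketch C", 0.60),
]
chosen = select_answer(runs)

# If every run is unsure, the system abstains, as the model did on problem six.
abstained = select_answer([Candidate("partial attempt", 0.2)])
```

This only works if the confidences mean something. With overconfident runs, every candidate would report something near 1.0 and the ranking would be noise, which is why calibration and parallel compute go together.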

Speculations

More advanced setups may use a Manager–Worker architecture: one agent proposes diverse proof strategies, which are then distributed to workers for development. This encourages exploration and reduces duplication between workers. In a video by Google DeepMind, the mathematician Michel van Garrel, who had access to the model, states:

“When I was thinking about solving that question, I was thinking about three different things,

three different ideas,

but it seems that DeepThink was thinking about 20 or 100.”

In OpenAI's case, model behavior suggests a modular strategy: problems are broken into lemmas, which are solved independently. Agents could then share proven subparts, allowing others to compose a full proof (see DeepMind’s comment above about a model that can “explore and combine multiple possible solutions”).

GPT‑5 and the Push for Reliability

Uncertainty estimation also seems to have played a role in the recent GPT-5 release. From the announcement:

"We’ve particularly invested in making our models more reliable when reasoning on complex, open-ended questions [...] across all of these benchmarks, “GPT‑5 thinking” shows a sharp drop in hallucinations, about six times fewer than o3, marking a clear leap forward in producing consistently accurate long-form content."

One big selling point of GPT-5 seems to be its reliability, which makes it suited for business applications in areas like healthcare.